AI/ML Challenges

Commonly used embedded control processors lack the compute resources needed to execute inference workloads efficiently.

Dedicated accelerators can work well for CNN layers, but they have drawbacks:

- They may not work for modern neural networks

- They can be difficult to program and may have immature tools

- In-house solutions may be expensive to maintain

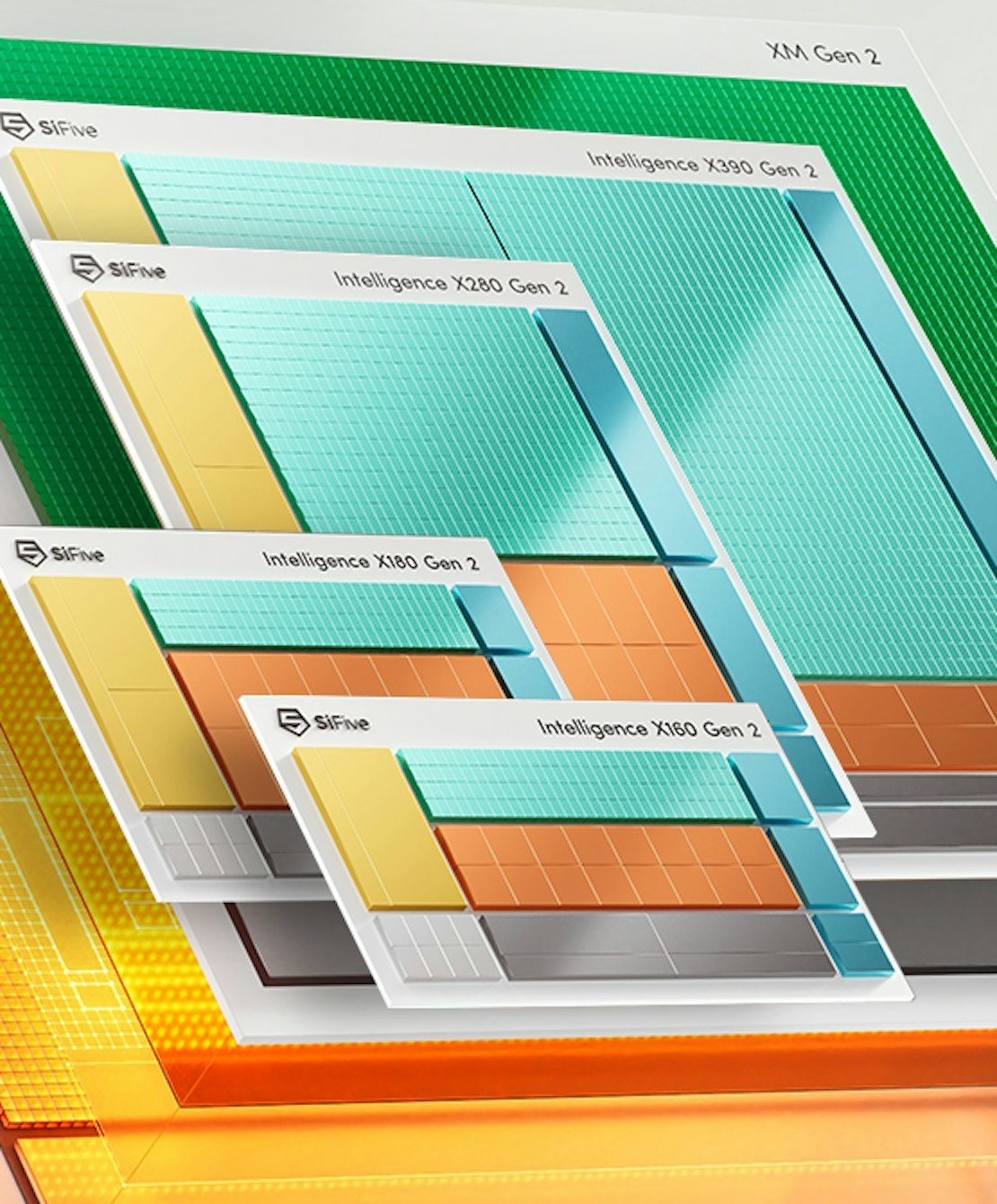

The SiFive Intelligence™ solution is a better option: a high-performance control processor with scalable vector compute resources, all based on an open instruction set. The platform scales to meet ML processing requirements, from extremely low power to high-performance compute.

The SiFive Solution

SiFive Intelligence is an integrated software and hardware solution for energy-efficient inference applications. It starts with SiFive’s industry-leading RISC-V Core IP, adds RISC-V Vector (RVV) support, and then goes a step further by including software tools and the new SiFive Intelligence Extensions, vector operations specifically tuned to accelerate machine learning workloads. These new instructions are integrated with a multi-core-capable, Linux-capable, dual-issue microarchitecture with vectors up to 512 bits wide, and are bundled with an IREE-based AI/ML reference software stack that can compile and run many models and frameworks. Together, they are well suited to high-performance, low-power inference applications.

Standalone Vector CPU

Intelligence cores are very capable embedded CPUs on their own.

The vector engine provides data processing capabilities that enable filters, transforms, convolutions, and AI inference without additional processing elements. This:

- Reduces hardware complexity and cost

- Provides a simple software model
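As an illustration of the kind of filter workload described above, the following is a minimal sketch in portable C of a 1-D FIR filter written in the strip-mined style that RVV code typically follows. It is not SiFive code and uses no vendor API. The names `VLMAX_E8`, `set_vl`, and `fir_i8` are hypothetical, and the value 64 assumes 512-bit vector registers holding 8-bit elements with a register group of one (512 / 8 = 64).

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Assumed maximum vector length in elements: 512-bit registers holding
   8-bit elements, one register per group (512 / 8 = 64). Hypothetical. */
#define VLMAX_E8 64

/* Stand-in for the RVV "set vector length" step: returns how many
   elements the next pass should process. */
static size_t set_vl(size_t remaining) {
    return remaining < VLMAX_E8 ? remaining : VLMAX_E8;
}

/* 1-D FIR filter: out[i] = sum over k of in[i + k] * coeff[k].
   `in` must hold n_out + taps - 1 samples. */
void fir_i8(const int8_t *in, const int8_t *coeff, size_t taps,
            int32_t *out, size_t n_out) {
    assert(taps > 0);
    for (size_t i = 0; i < n_out; ) {
        size_t vl = set_vl(n_out - i);   /* strip length for this pass */

        /* Each inner j-loop models one vector operation over vl elements. */
        for (size_t j = 0; j < vl; j++)
            out[i + j] = 0;
        for (size_t k = 0; k < taps; k++)
            for (size_t j = 0; j < vl; j++)
                out[i + j] += (int32_t)in[i + j + k] * coeff[k];

        i += vl;                         /* the last strip may be shorter */
    }
}
```

Because the strip length comes from `set_vl` rather than a hard-coded width, the same loop structure handles any input size, including a final partial strip, without a separate scalar tail loop. A real RVV kernel would replace the inner loops with vector loads and widening multiply-accumulate instructions.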

Accelerator Control Unit

Intelligence cores can provide control functions for a customer-designed accelerator.

This allows the customer to focus on the data processing capabilities of the accelerator rather than on control and housekeeping.

The vector engine allows complementary compute, such as pre- and post-formatting of data or handling of corner cases.
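One plausible example of pre-formatting with corner-case handling is converting floating-point data to 8-bit integers before handing it to an accelerator. The sketch below is in portable C and is an assumption about what such a step might look like, not a description of any SiFive or customer interface. The function name `quantize_f32_to_i8` and its `scale` and `zero_point` parameters are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Convert float samples to int8 as q = round(x / scale) + zero_point,
   saturating to [-128, 127]. Hypothetical pre-formatting step. */
void quantize_f32_to_i8(const float *src, int8_t *dst, size_t n,
                        float scale, int32_t zero_point) {
    assert(scale > 0.0f);
    for (size_t i = 0; i < n; i++) {
        float v = src[i] / scale + (float)zero_point;

        /* Corner cases: saturate out-of-range values and infinities.
           NaN fails the first comparison and so maps to -128. */
        if (!(v >= -128.0f)) v = -128.0f;
        if (v > 127.0f) v = 127.0f;

        /* Round half away from zero; v is already within int8 range. */
        dst[i] = (int8_t)(v >= 0.0f ? v + 0.5f : v - 0.5f);
    }
}
```

The loop body is element-wise with no dependence between iterations, which is the shape of work a vector engine handles well alongside a fixed-function accelerator.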